In a rapidly evolving digital landscape, organizations constantly seek ways to improve efficiency, gain insights, and secure a competitive edge. The emergence of Large Language Models (LLMs) presents a transformative opportunity to address these needs. These AI tools, exemplified by ChatGPT, are trained on extensive datasets drawn largely from the internet, which gives them a remarkable range of capabilities out of the box.

The challenge and opportunity for businesses lie in applying these capabilities to specific functions tailored to their own data and operational needs. Doing so requires progressively greater technical sophistication and a strategic approach to data management and quality. This article outlines a crawl, walk, run framework for deploying LLMs at increasing levels of technical depth. By adopting this mindset, executives can build their organization's technical and data management capabilities step by step, ultimately unlocking the full potential of LLMs to drive efficiency, insight, and competitive advantage.

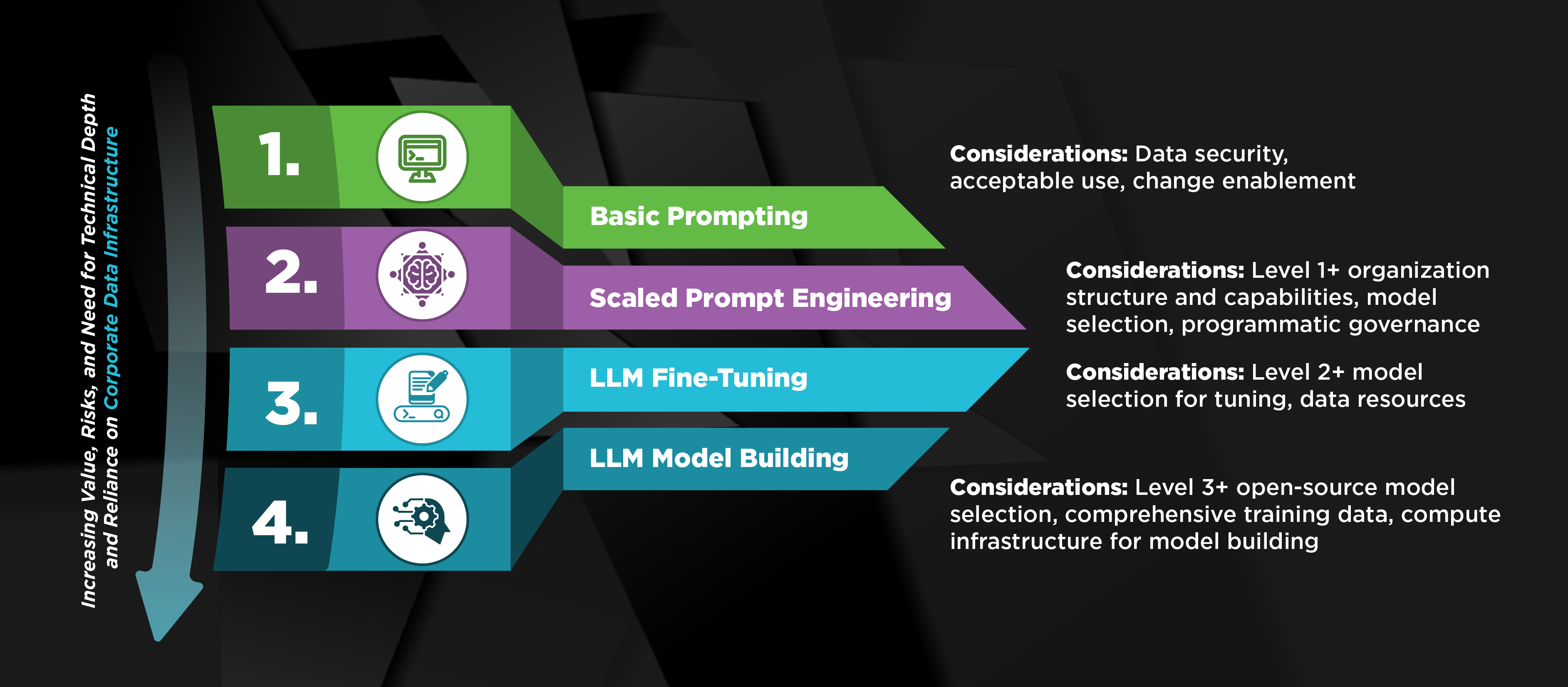

A Crawl, Walk, Run Framework for LLM Deployment

Level 1: Basic Prompting

The journey into LLM utilization begins with basic prompting. At this stage, virtually anyone within an organization can use AI tools to improve job performance across a wide range of tasks with minimal upfront training. Even at this level, however, organizations must address data security, define acceptable use of the technology, and prepare their people for change.

Level 2: Scaled Prompt Engineering

As users gain familiarity with LLMs, the next phase is prompt engineering: providing specific directions and examples to guide the AI toward desired outcomes. In addition to the considerations at Level 1, new concerns and risks emerge when prompt engineering is implemented at scale. It requires greater subject matter expertise and technical depth within the organization to build applications that call LLMs systematically, as well as application and programmatic governance to ensure LLMs are used effectively and securely.
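To make "providing specific directions and examples" concrete, here is a minimal sketch of how an application might assemble a few-shot prompt programmatically. It assumes the system/user/assistant message layout that many chat-style LLM APIs accept; the function name `build_few_shot_messages` and the maintenance-ticket examples are hypothetical, and no specific vendor API is called.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(
    instructions: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> List[Dict[str, str]]:
    """Assemble a few-shot prompt as a list of chat messages.

    Hypothetical helper: the role/content layout mirrors a format many
    chat-style LLM APIs accept, but it is an assumption, not a vendor schema.
    """
    # Directions go first, as a system message.
    messages = [{"role": "system", "content": instructions}]
    for user_text, ideal_answer in examples:
        # Each worked example is a user turn followed by the desired reply.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_answer})
    # The real question goes last so the model answers it in the same style.
    messages.append({"role": "user", "content": query})
    return messages


if __name__ == "__main__":
    msgs = build_few_shot_messages(
        instructions="Summarize each maintenance ticket in one sentence.",
        examples=[
            ("Pump P-101 leaking at seal; replaced gasket.",
             "Replaced leaking seal gasket on pump P-101."),
        ],
        query="Breaker tripped twice on feeder 7; reset and inspected, no fault found.",
    )
    for m in msgs:
        print(m["role"], "->", m["content"])
```

Keeping prompts in code like this, rather than typing them ad hoc, is what makes them reviewable and governable at scale: the instructions and examples can be versioned, tested, and approved like any other application asset.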

Level 3: LLM Fine-Tuning

Fine-tuning is a more advanced application of LLMs in which the model is further trained on curated examples to improve its performance on a specific task (e.g., training a GenAI spare parts classification app on examples of parts already labeled as critical or non-critical). In addition to the considerations at Level 2, this level combines domain expertise with technical know-how in data management. Key considerations include model selection and high-quality data resources: typically a few hundred to several thousand relevant examples that are clean, represent the range of cases the model may encounter, and contain rich, detailed, and nuanced information to promote model understanding.
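The data-quality requirements above can be partly enforced in code before any training run. The sketch below turns labeled spare parts rows into chat-style training records and writes them as JSONL, a layout many fine-tuning services accept. It assumes hypothetical `description` and `label` fields and a two-label scheme; the exact record schema varies by provider and should be checked against their documentation.

```python
import json
from typing import Dict, Iterable, List

# Hypothetical label set for the spare parts classification example.
ALLOWED_LABELS = {"critical", "non-critical"}


def to_training_records(rows: Iterable[Dict[str, str]]) -> List[Dict]:
    """Turn labeled spare parts rows into chat-style fine-tuning records.

    Rows with a missing description or an unexpected label are skipped, and
    duplicate descriptions (case-insensitive) are kept only once, as a basic
    data-quality gate.
    """
    records = []
    seen = set()
    for row in rows:
        description = (row.get("description") or "").strip()
        label = (row.get("label") or "").strip().lower()
        if not description or label not in ALLOWED_LABELS:
            continue  # drop incomplete or mislabeled examples
        key = description.lower()
        if key in seen:
            continue  # drop duplicates so no single case is over-weighted
        seen.add(key)
        records.append({
            "messages": [
                {"role": "system",
                 "content": "Classify the spare part as critical or non-critical."},
                {"role": "user", "content": description},
                {"role": "assistant", "content": label},
            ]
        })
    return records


def write_jsonl(records: List[Dict], path: str) -> None:
    """Write one JSON object per line (assumed upload format)."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")
```

Checks like these catch only mechanical problems. Whether the examples cover the full range of cases and carry enough nuance still depends on domain experts reviewing the data.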

Level 4: Advanced Customization and Deployment

The most sophisticated stage involves training and deploying custom LLMs or other AI models from scratch. In addition to the considerations at Level 3, this process demands extensive technical expertise, comprehensive high-quality training datasets (hundreds of gigabytes to terabytes), and substantial computational resources. Additional considerations include evaluating different models to find the best fit for specific needs and objectives, and leveraging open-source models. Open-source models can be deployed on-premises (for example, to keep highly confidential data in-house), and their code can be modified as needed. Furthermore, building out a more robust data training environment is crucial to support more dynamic prompting, such as answering questions that depend on real-time data, like “How many days of inventory do I have on hand?” These enhancements enable a more tailored and efficient use of AI technologies.
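A question like "How many days of inventory do I have on hand?" depends on figures that change daily, so the model needs current data at query time rather than only what it saw in training. The sketch below shows one common pattern: computing days on hand from live records and placing the result in the prompt (a retrieval-style approach, not necessarily the environment the article envisions). It uses the standard ratio days on hand = units on hand / average daily usage. The `InventorySnapshot` record and both functions are hypothetical, and the sketch does not connect to any real inventory system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InventorySnapshot:
    """Hypothetical record pulled from an inventory system at query time."""
    item: str
    on_hand_units: float
    daily_usage_units: List[float]  # recent daily consumption, e.g. last 30 days


def days_on_hand(snapshot: InventorySnapshot) -> float:
    """Days of inventory on hand = units on hand / average daily usage."""
    if not snapshot.daily_usage_units:
        raise ValueError("need at least one day of usage history")
    avg_daily = sum(snapshot.daily_usage_units) / len(snapshot.daily_usage_units)
    if avg_daily <= 0:
        return float("inf")  # no consumption: stock never runs out at this rate
    return snapshot.on_hand_units / avg_daily


def build_grounded_prompt(question: str, snapshots: List[InventorySnapshot]) -> str:
    """Put freshly computed figures into the prompt so the answer reflects current data."""
    lines = [f"- {s.item}: {days_on_hand(s):.1f} days on hand" for s in snapshots]
    return (
        "Answer using only the current inventory figures below.\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    snap = InventorySnapshot("Gasket kit", 120, [10, 20, 30])
    print(build_grounded_prompt("How many days of inventory do I have on hand?", [snap]))
```

Computing the number in code and letting the model handle only the wording keeps the arithmetic exact and auditable. The model is then responsible only for phrasing the answer, not for recalling or estimating the figures.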

ScottMadden: Facilitating Your LLM Journey

Recognizing the potential and challenges of LLM deployment, ScottMadden offers a comprehensive suite of solutions designed to support organizations throughout their AI journey:

Security Governance Services: Ensuring the secure and ethical use of LLMs is foundational. Our services provide a robust framework for managing risks and complying with regulatory requirements, enabling businesses to leverage AI confidently and responsibly.

Intelligent Automation Services: By identifying processes that can benefit from automation and enhancing them with LLMs, ScottMadden helps organizations achieve operational efficiencies and drive innovation, ensuring that AI integration is aligned with strategic business objectives.

Change Enablement Services: The successful adoption of LLMs requires not just technological implementation but also organizational adaptation. Our change enablement services are designed to prepare teams, adapt processes, and cultivate a culture that embraces AI-driven transformation.

As LLM technology continues to evolve, the businesses that will thrive are those that not only adopt these tools but do so strategically and thoughtfully. By understanding the levels of LLM engagement and the considerations at each stage, corporate executives can better position their organizations to harness the full power of this disruptive technology. With ScottMadden’s expertise and solutions, your journey toward leveraging LLMs can be both successful and transformative, unlocking new levels of efficiency, insight, and competitive edge in the digital age.